Choosing cabling infrastructure for greenfield data centers

Designers should anticipate higher data rates and longer distances down the line

Despite the economic volatility of recent years, demand for the infrastructure that supports digital business continues to increase. New data center construction slowed at first during the global pandemic but was followed by robust expansion: according to commercial real estate analyst CBRE, demand for capacity in North America more than tripled year over year in the first half of 2022. Much of this new global data center construction, known as “greenfield” construction, comes from hyperscale data centers, although the market has also seen a resurgence in enterprise demand.

With these greenfield data center projects, network designers may be starting from scratch with only an empty room or just a concrete foundation. This means a significantly higher upfront investment is required compared to updating an existing network, but there's also the opportunity to create the right network architecture from the start — the only real constraints are size, power, and money. That differs from brownfield data center upgrades, which face added constraints from the network infrastructure in place, such as the existing cable type or cabling layout. Brownfield projects are often more time-constrained too, since updates to an operating data center may involve network disruption and downtime.

When data center operators begin the process of defining the makeup of their network, there are some important practical design questions they need to answer, including general architecture and distance needs, data rate requirements, fiber types, and cost.

Design considerations

The first decision to make for a new data center network is to choose the right architecture. This involves asking questions like, “What are the business needs of the data center?” and “What are the workloads and processes that will run in the data center?” While these questions are bigger than just the physical layer, they do lead to size and speed decisions that shape the makeup of the cabling system.

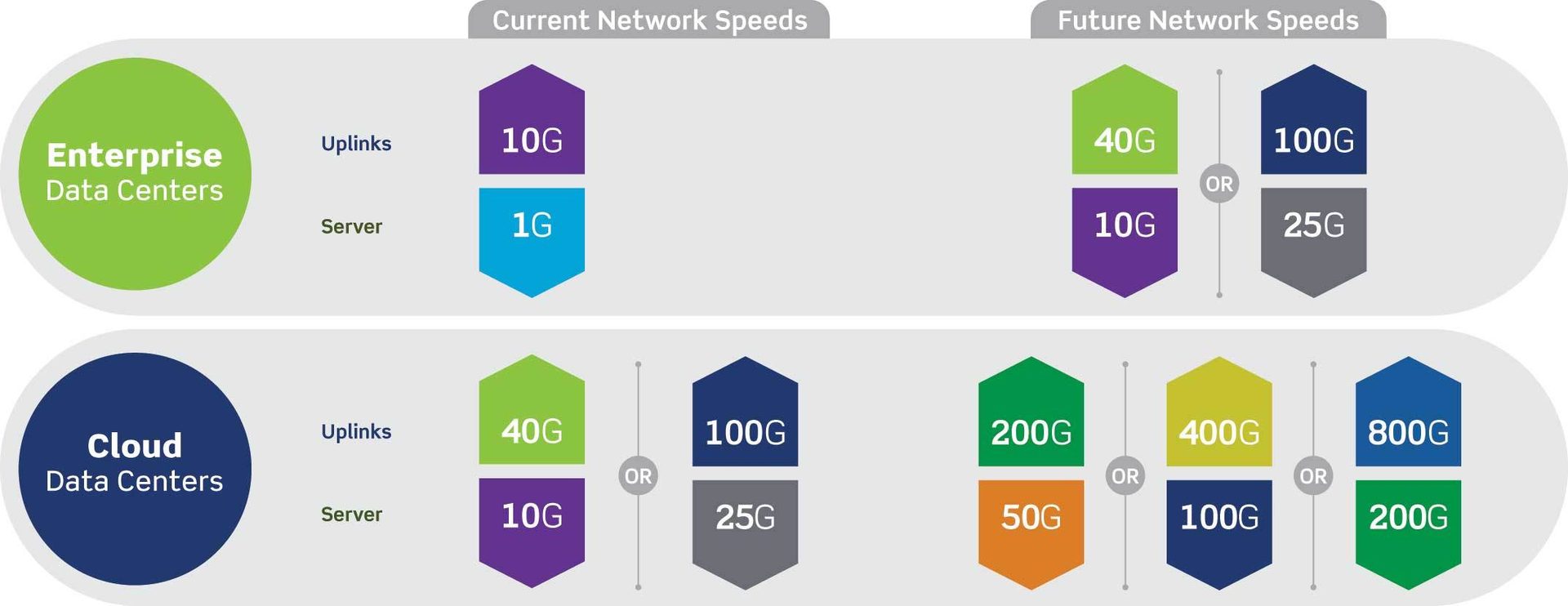

The type of data center architecture will affect the speed requirements of the network and the transceiver types best suited to the design. Over the past 10 years, enterprise and cloud provider data centers have diverged in their network migration patterns. Cloud provider networks, for example, have historically used 40 Gb/s uplinks from the switch and 10 Gb/s links from switch to server. These networks are now moving to 100 Gb/s uplinks and 25 Gb/s server downlinks, and some are preparing to migrate to 200 and 400 Gb/s uplinks with 50 and 100 Gb/s at the server.

Current versus future data center network configurations.

Graphics courtesy of Leviton Network Solutions
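The uplink/downlink speed pairings described above (40/10, 100/25, and 400/100 Gb/s) can be put in perspective with a quick oversubscription calculation: the ratio of server-facing bandwidth to uplink bandwidth on a ToR switch. This is a minimal sketch; the port counts in `TOR_CONFIGS` are hypothetical values chosen only for illustration, and real switches vary.

```python
def oversubscription(down_ports: int, down_gbps: int,
                     up_ports: int, up_gbps: int) -> float:
    """Return server-facing bandwidth divided by uplink bandwidth for one switch."""
    downlink_capacity = down_ports * down_gbps
    uplink_capacity = up_ports * up_gbps
    return downlink_capacity / uplink_capacity


# Hypothetical ToR port counts: (downlinks, Gb/s each, uplinks, Gb/s each)
TOR_CONFIGS = {
    "10G servers, 40G uplinks": (48, 10, 6, 40),
    "25G servers, 100G uplinks": (48, 25, 8, 100),
    "100G servers, 400G uplinks": (32, 100, 8, 400),
}

for name, (dp, dg, up, ug) in TOR_CONFIGS.items():
    print(f"{name}: {oversubscription(dp, dg, up, ug):.2f}:1")
```

A 1:1 ratio means the uplinks can carry all server traffic at line rate; higher ratios assume that not every server transmits at full speed at the same time.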

Data rate and transceiver choices

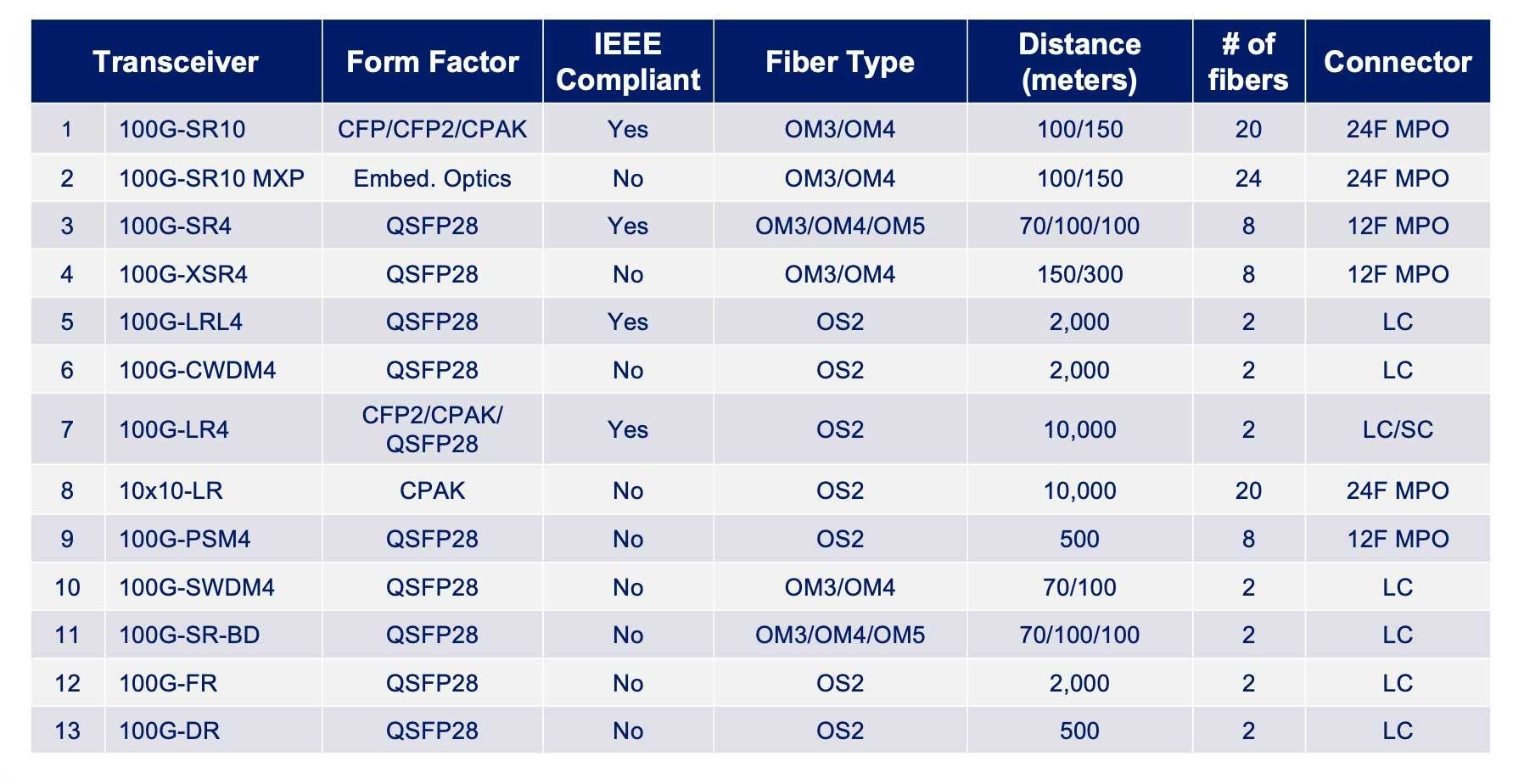

While 40 Gb/s was initially popular as a switch-to-switch connection speed, it never caught on as a server speed, and it has been losing share to higher, more economical speeds such as 100 Gb/s. 100 Gb/s is on the verge of becoming the most common transceiver speed, and there are many options at that data rate. 100G-SR10, 100G-SR4, 100G-LRL4, and 100G-LR4 are the Institute of Electrical and Electronics Engineers (IEEE)-compliant options; the rest are all defined by multi-source agreements (MSAs), as shown in Table 1.

Multi-source agreements (MSAs) have created an explosion of options. For some data center managers, following established industry standards is a best practice. But with data center demands changing so rapidly, the latest technology often reaches the market well before standards bodies can address it. Many cloud and hyperscale data centers also have distance, cost, or customization requirements that simply don't fit standard specifications. MSA-developed transceivers can address these requirements, and the MSA development process can go from concept to product within a year. For example, 100G-SR-BD, 100G-FR, and 100G-DR came to market quickly in 2021, providing 100 Gb/s breakout options for 400 Gb/s ports in both server and switch applications.

TABLE 1: A list of 100G optical transceivers.

While there are fewer 400 Gb/s transceiver types than 100 Gb/s types, the number of options continues to grow. The IEEE-defined 400G-DR4 and 400G-SR8 transceivers, which use MPO connections, are expected to become the most popular 400 Gb/s types. The most common use of a 400 Gb/s port will be to aggregate multiple 100 Gb/s downlinks: single-mode 400G-DR4 aggregates four lanes of 100G-DR1, while multimode 400G-SR4.2 will aggregate four lanes of 100G-SR1. More MSA-driven transceiver options are in development for 400 Gb/s, and groups are already working on 800 Gb/s and higher speeds.

Fiber cable type and connection choices

A data center network has several physical-layer (Layer 1) tiers, each with different reach requirements. For example, the transceiver and fiber cabling choices for a top-of-rack (ToR) design, which typically uses short 2- to 3-meter connections, can be very different from those for an end-of-row or spine design.

The interconnected mesh is a popular architecture for cloud data centers. In this design, every ToR switch connects to every spine switch through a single leaf switch. Connections are shortest at the bottom of this architecture and grow longer toward the spine and core connections at the top. The short-reach connections from ToR switches to servers typically use multimode fiber or even copper. ToR-to-leaf connections vary, but OM4 multimode fiber is by far the most popular choice today. Single-mode OS2 fiber dominates leaf-to-spine and superspine connections, because only single-mode fiber can meet the longer distances required.
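The tiering logic above amounts to picking the least expensive medium whose reach covers each link. The sketch below encodes that rule for 100 Gb/s-class links. It is a minimal illustration under stated assumptions. The reach values are commonly cited nominal figures: a few meters for passive copper direct-attach cables (3 m assumed here), 100 m for 100GBASE-SR4 over OM4, 500 m for 100G-DR, 2 km for 100G-FR, and 10 km for 100GBASE-LR4 over OS2. The list order is an assumed cost ranking, not vendor pricing. The tier lengths in `TIERS` are hypothetical.

```python
from typing import NamedTuple, Optional


class Option(NamedTuple):
    medium: str
    optic: str
    max_reach_m: int  # assumed nominal reach for this medium/optic pairing


# Ordered from (assumed) least to most expensive per link.
OPTIONS_100G = [
    Option("copper DAC", "passive direct-attach cable", 3),
    Option("OM4 multimode", "100GBASE-SR4", 100),
    Option("OS2 single-mode", "100G-DR", 500),
    Option("OS2 single-mode", "100G-FR", 2_000),
    Option("OS2 single-mode", "100GBASE-LR4", 10_000),
]


def cheapest_option(link_length_m: float) -> Optional[Option]:
    """Return the first (cheapest) option whose reach covers the link, or None."""
    for option in OPTIONS_100G:
        if link_length_m <= option.max_reach_m:
            return option
    return None


# Hypothetical link lengths for each tier
TIERS = {
    "ToR to server": 2,
    "ToR to leaf": 60,
    "leaf to spine": 350,
    "spine to superspine": 1_500,
}

for tier, length in TIERS.items():
    choice = cheapest_option(length)
    label = f"{choice.medium} ({choice.optic})" if choice else "no option in table"
    print(f"{tier}, {length} m: {label}")
```

With these assumptions, the sketch reproduces the pattern described above: copper or multimode at the bottom of the architecture and single-mode toward the spine.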

Once data center managers choose fiber types for the new network, they can then consider connection types. Today, the large majority of data center connectors are either LC or MPO connectors.

LC duplex connectors are currently the most popular fiber connection type. The duplex connector is easy to manage from a polarity perspective, and its established popularity makes it readily available. A common question we hear is, “Will LC connections work when upgrading to data rates beyond 25 Gb/s?” LC solutions do exist for 40 Gb/s and 100 Gb/s networks, but these duplex options typically require wavelength-division multiplexing (WDM) technologies such as coarse WDM (CWDM), which can raise the price of transceivers.
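Counting fibers shows why duplex LC links at higher speeds depend on WDM. A parallel optic dedicates one fiber per optical lane in each direction, while a WDM optic multiplexes several lanes onto a single fiber. The sketch below assumes four optical lanes per direction for 100 Gb/s and the four-wavelength, 20 nm CWDM grid (1271 to 1331 nm) used by 100G CWDM4 optics. The helper names `fibers_per_link` and `cwdm_grid` are hypothetical.

```python
import math


def fibers_per_link(optical_lanes: int, wavelengths_per_fiber: int = 1) -> int:
    """Fibers for one link: each direction needs enough fibers to carry every lane."""
    fibers_per_direction = math.ceil(optical_lanes / wavelengths_per_fiber)
    return 2 * fibers_per_direction  # one set to transmit, one set to receive


def cwdm_grid(count: int, start_nm: int = 1271, spacing_nm: int = 20) -> list[int]:
    """Center wavelengths (nm) on a coarse WDM grid with 20 nm spacing."""
    return [start_nm + i * spacing_nm for i in range(count)]


# (optical lanes per direction, wavelengths multiplexed per fiber)
EXAMPLES = {
    "100GBASE-SR4, parallel MPO": (4, 1),
    "100G CWDM4, duplex LC": (4, 4),
    "400GBASE-DR4, parallel MPO": (4, 1),
    "400GBASE-SR8, parallel MPO": (8, 1),
}

for name, (lanes, wavelengths) in EXAMPLES.items():
    print(f"{name}: {fibers_per_link(lanes, wavelengths)} fibers")

print("CWDM4 wavelengths (nm):", cwdm_grid(4))
```

Bidirectional (BiDi) optics, which transmit and receive on the same fiber, do not fit this simple model and would need their own entry.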

Beyond 10 Gb/s, parallel optics with MPO connections have become a popular choice. MPOs allow breakout options that make it easy to connect higher-speed ports to multiple lower-speed ports. For example, a Base-8 MPO connection delivering 100 Gb/s can break out to four 25 Gb/s ports at the server rack. MPO assemblies also consolidate trunk cable connections into a denser package, freeing up pathway space and creating backbone connections that are less likely to need changes during future tech refreshes. This matters because long spine-to-leaf trunk runs are not easily accessible once installed and can be time-consuming and labor-intensive to rip and replace.
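The Base-8 breakout can be sketched as a fiber map from one 100 Gb/s MPO port to four 25 Gb/s duplex server ports. This is a minimal illustration that assumes an SR4-style layout on a 12-position MPO: transmit on positions 1 to 4, receive on positions 9 to 12, and the middle four unused. It also assumes the common 1/12, 2/11, 3/10, 4/9 pairing. In practice, the end-to-end mapping depends on the polarity method and the breakout assembly, so treat `base8_breakout` as a hypothetical helper rather than a wiring specification.

```python
# Assumed layout (SR4-style parallel optic on a 12-position MPO):
# transmit on positions 1-4, receive on positions 9-12, middle four unused.
TX_POSITIONS = [1, 2, 3, 4]
MPO_POSITIONS = 12


def base8_breakout(lane_gbps: int = 25) -> list[tuple[int, int, int, int]]:
    """Return (server_port, tx_position, rx_position, gbps) for each breakout lane.

    Pairs Tx position n with Rx position 13 - n (1/12, 2/11, 3/10, 4/9);
    this pairing is an assumption and depends on the polarity method used.
    """
    lanes = []
    for port, tx in enumerate(TX_POSITIONS, start=1):
        rx = MPO_POSITIONS + 1 - tx
        lanes.append((port, tx, rx, lane_gbps))
    return lanes


breakout = base8_breakout()
for port, tx, rx, gbps in breakout:
    print(f"server port {port}: MPO fiber {tx} (Tx) / fiber {rx} (Rx), {gbps} Gb/s")

total = sum(gbps for *_, gbps in breakout)
print(f"aggregate: {total} Gb/s over {2 * len(breakout)} fibers")
```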

In recent years, a new class of connectors, known as very small form factor (VSFF) connectors, has been introduced to address data rates of 400 Gb/s and higher. These duplex connectors are used in OSFP, QSFP-DD, and SFP-DD transceivers to achieve higher switch port density than traditional LC duplex connectors allow. Three VSFF variants have been adopted by multivendor MSA groups: the CS, SN, and MDC connectors.

Because VSFF connectors are still new to the market, it is not yet clear which will gain the widest acceptance in the structured cabling space.

Typical link costs

The best physical layer design will ultimately be the one that meets performance requirements while remaining economical enough to fit within budget constraints. In striking this balance, there are practical, cost-based reasons to implement different technologies at different tiers.

For example, copper or multimode fiber will often still be the technology of choice in data center rows and servers. Copper is the least expensive solution to implement but supports shorter link distances and lower maximum data rates — currently up to 10 Gb/s. Multimode can handle higher speeds and support longer distances than copper but will come at a higher cost. Single-mode optics will be the technology of choice for the spine tier of the network. In some cases, it still comes at a higher cost than multimode, but it is typically the only solution capable of delivering higher data rates, such as 100 Gb/s (and higher), at the longer link distances required.

When considering costs, network designers should always look at the cost of the entire link. For example, parallel-optic transceivers typically cost less than duplex transceivers, but the associated parallel MPO cabling costs more, since it is an eight-fiber solution rather than a two-fiber solution. The longer the link, the more the cable cost matters. In general, however, link costs are shaped largely by the transceivers, which are the most expensive components. This is a key reason why the transceiver should be the first decision made when designing the network.
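The whole-link view can be made concrete with a small cost model. In this model, transceivers and connectors are a fixed cost per link, while cabling scales with fiber count and length. Every price below is a made-up placeholder for illustration only, and `LinkOption` and `crossover_m` are hypothetical names. Substitute real quotes before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkOption:
    name: str
    transceiver_cost: float   # per transceiver; two per link (hypothetical price)
    fibers: int               # fibers in the link
    cost_per_fiber_m: float   # installed cable cost per fiber per meter (hypothetical)
    connector_cost: float     # per terminated end; two per link (hypothetical)

    def fixed_cost(self) -> float:
        return 2 * self.transceiver_cost + 2 * self.connector_cost

    def cost_per_meter(self) -> float:
        return self.fibers * self.cost_per_fiber_m

    def link_cost(self, length_m: float) -> float:
        return self.fixed_cost() + self.cost_per_meter() * length_m


def crossover_m(a: LinkOption, b: LinkOption) -> float:
    """Length at which two options cost the same (assumes per-meter costs differ)."""
    return (b.fixed_cost() - a.fixed_cost()) / (a.cost_per_meter() - b.cost_per_meter())


# Illustrative placeholder prices only; not market data.
PARALLEL = LinkOption("parallel, 8-fiber MPO", 300.0, 8, 0.5, 40.0)
DUPLEX = LinkOption("duplex, 2-fiber LC", 450.0, 2, 0.5, 10.0)

for length in (30, 150):
    for option in (PARALLEL, DUPLEX):
        print(f"{option.name} @ {length} m: {option.link_cost(length):.0f}")
print(f"break-even length: {crossover_m(PARALLEL, DUPLEX):.0f} m")
```

Under these invented numbers, the parallel link is cheaper up to about 80 m and the duplex link is cheaper beyond that. At 30 m, the transceivers account for 600 of the parallel link's 800 total. Real prices will move the break-even point, but the structure of the comparison stays the same.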

Within the passive infrastructure, most of the cost lies in the cabling and connectivity. Components such as splice or patch enclosures add comparatively little to the material cost of the overall structured cabling system. While the choice of patching hardware has little effect on capital expenditures, it can significantly influence operational costs, because this is where technicians will access the cabling after installation. Density, accessibility, manageability, and physical protection should all be considered, as they affect the efficiency of future moves, adds, and changes. As with fiber type and connection type, the ideal enclosures, panels, or fiber cassettes may differ by area or tier of the data center. However, the deciding factors will have more to do with functional efficiency than product cost.

As new data centers are designed to scale out with more servers over time, network designers should plan a fiber infrastructure that anticipates higher data rates and longer distances down the line. Any greenfield data center becomes a brownfield at its first tech refresh, and for many data centers that refresh cycle occurs every three to five years. Planning for it now will help ensure the most cost-effective design with the least disruption.

Mike Connaughton, RCDD, CDCD

Mike has 30-plus years of experience with fiber-optic cabling and is responsible for strategic data center account support and alliances at Leviton. He has received the Aegis Excellence Award from the U.S. Navy for his work on the Fiber Optic Cable Steering Committee and was a key member in developing the SMPTE 311M standard for a hybrid fiber optic HD camera cable. He has participated in fiber standardization meetings with TIA, ICEA, ANSI, and IEEE.

simonkr/Vetta via Getty Images