What’s Next for Distributed Edge Computing?

Data center total cost of ownership considerations and innovation at the edge

As data volumes, device counts, and new technologies continue to grow, more organizations are seeing the benefits of edge computing. According to a survey by Analysys Mason, edge computing is a top strategic priority for many operators: 30% are already deploying an edge cloud, and 57% are outlining plans to do so in the next year.

The term “edge” refers to a collection of devices that allow data processing and service delivery closer to the source. How close this edge sits to the end user, retail sales point, traffic network sensor, or mobile phone can affect the quality of the services delivered to it. With 5G comes the emergence of applications like virtual and augmented reality (AR/VR), industrial robotics/controls, Industry 4.0, interactive gaming, autonomous driving, and remote medicine, to name a few. These applications demand a combination of latency, cost, service availability, and scale that simply cannot be delivered by virtual machines running in a typical public cloud infrastructure. Add to this the densification of data resulting from billions of connected devices, and service providers are looking to edge computing to reduce latency and congestion problems as well as to improve the overall performance of the applications running on those devices.

Emerging 5G applications include AR/VR and industrial robotics/controls. Photo courtesy of Pixabay.

Many of these new applications require an end-to-end latency below 10 milliseconds, which typical public clouds are unable to deliver. While 5G holds the promise of enabling these new applications and use cases, telecom operators and cloud and data center providers will have to make significant updates to their current networks to get there. For example, telco edge data centers must fit within existing legacy central offices and, as a result, must contend with very limited space, power, and cooling. These telco edge data centers will need to host a variety of container-based applications, including real-time interactive applications, content delivery networks, basic networking services, mobile and fixed packet core nodes, and IoT frameworks.
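To see why distance matters for a 10-millisecond target, consider a rough latency budget. The sketch below is only an illustration: it assumes light travels through optical fiber at roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), and the distances and processing times are hypothetical values, not measurements from any operator.

```python
# Rough latency budget sketch. All inputs are illustrative assumptions.
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float, processing_ms: float) -> float:
    """Round-trip propagation delay over fiber plus server-side processing time."""
    propagation_ms = 2 * distance_km / FIBER_KM_PER_MS
    return propagation_ms + processing_ms


def meets_budget(distance_km: float, processing_ms: float, budget_ms: float = 10.0) -> bool:
    """True if the estimated round trip stays below the latency budget."""
    return round_trip_ms(distance_km, processing_ms) < budget_ms


# Hypothetical regional cloud 1,500 km away vs. an edge site 50 km away,
# each assumed to spend 4 ms processing the request.
print("regional cloud:", round_trip_ms(1500, 4.0), "ms ->", meets_budget(1500, 4.0))
print("edge site:     ", round_trip_ms(50, 4.0), "ms ->", meets_budget(50, 4.0))
```

Under these assumptions, propagation alone consumes the whole budget for the distant site, before any queuing or radio access delay is counted.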

Innovation at the Edge

Edge computing and the hybrid cloud are necessary components to help drive consistency across all infrastructure footprints. But a mishmash of software-defined networking has also resulted in a proliferation of virtual machines (VMs), creating the dreaded server bloat. Imagine, if you will, the added layers of code and files as routing, switching, gateways, firewalls, and more go virtual; all of this eats up the cloud’s valuable compute and storage resources. Worse, it can also add network latency by creating circuitous pathways, redundant packets, and other inefficiencies that can be invisible to service providers but add milliseconds to every request.

One architectural approach is to create a clear separation between the control plane and data plane, for example, by leveraging containers to consolidate the data plane and offload it to P4-enabled switches. “Collapsing” the data plane, not just via containerization but also by logically unifying its code into the same hardware compute and storage area, avoids “tromboning” (the meandering data path among servers) and the replication of redundant networking code. Because P4-enabled switches add server-like compute and storage capabilities, these data plane functions can be “offloaded” from the servers onto the switches themselves. Consolidating and offloading leads to savings in both CapEx and OpEx, and there are other benefits too.
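The effect of tromboning can be sketched by modeling a request path as a list of hops and summing the delay at each one. The hop names and per-hop microsecond values below are hypothetical placeholders chosen only to show the shape of the comparison; they are not measurements of any real switch, VM, or P4 program.

```python
# Sketch: compare a "tromboned" data path with a collapsed one.
# Hop names and latencies (in microseconds) are illustrative assumptions.

def path_latency_us(hops):
    """Sum the per-hop latency of a path given as (name, latency_us) pairs."""
    return sum(latency_us for _, latency_us in hops)


# Traffic bounces between the switch and separate servers hosting each
# virtualized network function before it reaches the application.
tromboned_path = [
    ("leaf switch", 1),
    ("firewall VM", 50),
    ("leaf switch", 1),
    ("gateway VM", 50),
    ("leaf switch", 1),
    ("application container", 20),
]

# The same functions run in the switch data plane, so the packet
# crosses the switch once on its way to the application.
collapsed_path = [
    ("P4-enabled switch (firewall + gateway)", 2),
    ("application container", 20),
]

print("tromboned:", path_latency_us(tromboned_path), "us")
print("collapsed:", path_latency_us(collapsed_path), "us")
```

Whatever the real per-hop numbers are, the collapsed path has fewer hops, which is the point the consolidation argument rests on.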

In the event of a VM failure, several virtual network functions (VNFs) suffer severe service disruption. Often, it takes several minutes to restart a VM after a crash. Such behavior is not acceptable for latency-sensitive applications. Consider an autonomous vehicle, where even milliseconds of added latency can result in a crash.

Additionally, integrating networking functions into the switches and running them in containers can potentially double the number of available servers. By freeing up additional servers, more capacity is available to run differentiated revenue-generating apps, like connected cars, augmented reality, streaming movies, and critical infrastructure, within the same limited space, power, and compute resources.
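The “double the number of available servers” figure corresponds to a site where about half of the servers are occupied by networking functions. A minimal sketch of that arithmetic follows; the server counts are hypothetical.

```python
# Sketch: servers available for applications before and after networking
# functions are offloaded to switches. Counts are illustrative assumptions.

def app_servers(total_servers: int, networking_servers: int) -> int:
    """Servers left for applications when some are reserved for networking."""
    if not 0 <= networking_servers <= total_servers:
        raise ValueError("networking_servers must be between 0 and total_servers")
    return total_servers - networking_servers


total = 20              # hypothetical servers that fit in a central office
used_by_networking = 10  # hypothetical servers running routing, firewalls, etc.

before = app_servers(total, used_by_networking)
after = app_servers(total, 0)  # networking functions moved into the switches

print("before offload:", before)
print("after offload: ", after)
print("capacity ratio:", after / before)
```

If networking functions occupy a smaller share of the servers, the gain is proportionally smaller, which is why the article says "potentially".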

And, finally, P4 delivers the programmability for 5G providers to offer innovative new services on different network slices that meet the SLAs their customers require while also delivering secure, end-to-end, fully isolated networks.

At the heart of new edge architecture lies a unified solution for distributed edge computing. For the longest time, network, compute, and storage capabilities were deployed as silos, with each running its own control plane or operating system (OS). As much as automation has eased the deployment, configuration, and management of these elements, the need for common services, like zero-touch provisioning (ZTP), upgrades/downgrades, scalability on demand, and more, has generated different OSs, including network OSs. Kubernetes, for example, has become the de facto standard for orchestrating containers on compute. By using the same orchestration layer for network, compute, and storage, managing resources at the edge can be a simple process that leads to optimal results.

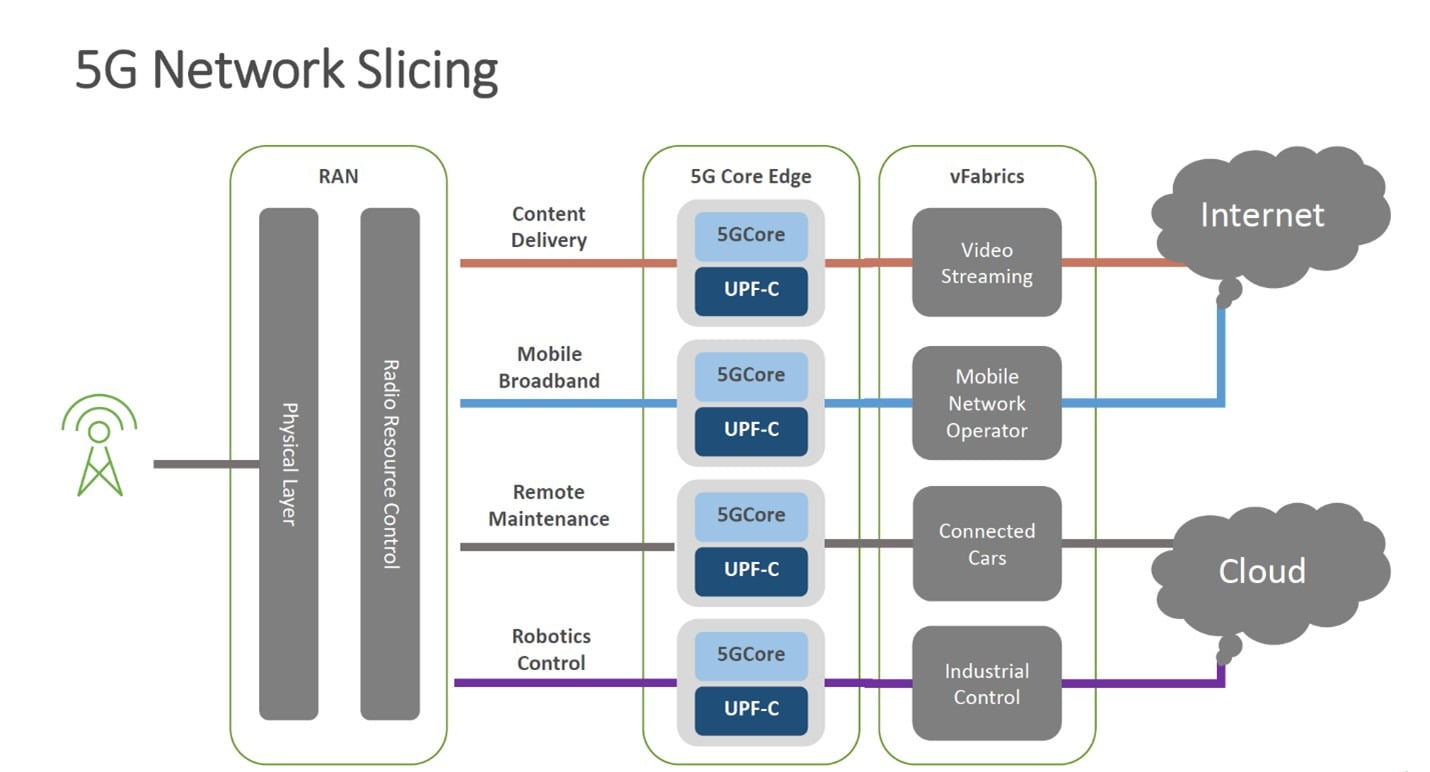

Today’s edge solutions must enable service providers to create multiple virtual data centers in support of network slicing as required by the 3GPP specifications. This partitioning is key to delivering on the promise of 5G networks. True network slicing will enable multiple operators to share a common distributed cloud infrastructure with each entity enjoying full isolation down to the hardware level for better security and a better quality of experience.

Network slicing on a shared distributed cloud infrastructure. Photo courtesy of Kaloom.
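One way to picture isolation “down to the hardware level” is as a check that no physical resource is assigned to more than one slice. The sketch below assumes a simplified model in which a slice is just a tenant name plus sets of switch ports and servers; the `Slice` class and the resource names are hypothetical and do not reflect the 3GPP data model or any vendor's API.

```python
# Sketch: verify that network slices do not share hardware resources.
# The Slice model and resource names are illustrative assumptions.
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    tenant: str
    switch_ports: frozenset
    servers: frozenset


def shared_resources(slices):
    """Map each resource claimed by more than one slice to the tenants claiming it."""
    owners = {}
    for s in slices:
        for resource in s.switch_ports | s.servers:
            owners.setdefault(resource, []).append(s.tenant)
    return {res: tenants for res, tenants in owners.items() if len(tenants) > 1}


def fully_isolated(slices) -> bool:
    """True when every hardware resource belongs to at most one slice."""
    return not shared_resources(slices)


operator_a = Slice("operator-a", frozenset({"port-1", "port-2"}), frozenset({"server-1"}))
operator_b = Slice("operator-b", frozenset({"port-3"}), frozenset({"server-2"}))

print(fully_isolated([operator_a, operator_b]))
```

A real slicing system would also need to account for bandwidth, memory, and control plane separation; this check covers only the exclusive assignment of ports and servers.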

Planning for the Future

When it comes to 5G, businesses and service providers alike need an interoperable ecosystem of low-cost, open-source solutions that can evolve so they don’t have to keep buying new hardware every few years. However, lowering costs alone is not enough. Businesses want 5G solutions that meet very specific technical demands, are unique to them, and provide differentiation.

Building 5G networks requires a fundamental shift that emphasizes support for edge-native apps that can be delivered with high performance at the lowest possible cost. Innovations in distributed edge computing will allow network, compute, and storage nodes to share the same underlying container-based execution environment and, in turn, help to address key issues.

Hitendra “Sonny” Soni

Hitendra “Sonny” Soni is the senior vice president of worldwide sales and marketing at Kaloom. With more than 25 years of experience in data center and cloud infrastructure, he drives industry awareness about innovations in distributed cloud edge data center fabric designed for 5G, IoT, big data, and AI.

Lead Image from Pixabay