// Standards and Metrics //

Data centers need a better metric

Beyond PUE

According to the U.N.’s Intergovernmental Panel on Climate Change (IPCC), global warming is more widespread and accelerating more rapidly than previously thought, and it is due to human activity.

For the data center industry, sustainability goals and meeting net-zero emissions targets have never been more important, but achieving these will require a paradigm shift — data center professionals need to consider new ways of approaching design.

First, the way data center efficiency is typically benchmarked needs to be reconsidered. Second, data center cooling techniques should be reviewed.



Measuring Data Center Efficiency Today

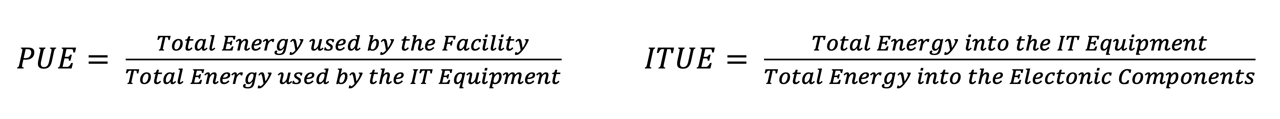

First proposed by Christian Belady and Chris Malone in 2006, power usage effectiveness (PUE) has become a globally recognized metric for reporting data center energy use efficiency. The metric provides a readily understandable means of communicating efficiency by providing the ratio between total facility power and power consumed by the IT load. In an ideal scenario, PUE would be 1 (i.e., 100% of the power delivered to the facility would be going to the IT equipment [ITE]). Hence, PUE aims to demonstrate the energy that is consumed by data center infrastructure — power and cooling — while provisioning compute power to the ITE.
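Written out as a formula, the ratio described above is as follows. The symbols $P_{\text{facility}}$ and $P_{\text{IT}}$ are notation introduced here for illustration; they are not taken from the original proposal.

```latex
\mathrm{PUE} = \frac{P_{\text{facility}}}{P_{\text{IT}}} \geq 1
```

For instance, a hypothetical facility drawing 1,200 kW in total to support a 1,000-kW IT load would report a PUE of 1.2.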

Specific to each individual data center, and influenced by a range of different factors, PUE does not provide a reliable platform for comparing one facility to another. Neither does it give a good indication of environmental performance, although it has often been used in this way. Rather, PUE provides trend data whereby efficiency improvements at a particular site can be monitored for their relative effectiveness. While the PUE metric has shortcomings that have long been recognized by many within the industry, it remains widely used as an industry benchmark, its enduring appeal due, perhaps, to its simplicity and usefulness. But in the drive toward achieving sustainable, net-zero carbon operation, the need for the industry to adopt a more precise means of measuring energy efficiency has become increasingly acute.

Additionally, ITE is a broad church, and there are questions about how power delivered to it is consumed. While the larger proportion of IT power is generally consumed by CPUs and GPUs that perform intensive data processing operations, a significant amount is also used by the onboard fans that induce cooling air across the server components. There is a strong argument that this fan power use should really lie on the facility power side of the PUE equation. Although server fan power is supplied to the rack, it effectively contributes only to the cooling of the ITE and not data processing.

It needs to be borne in mind that while a low PUE of, say, 1.1 looks impressive on paper, the product of a data center is not the energy consumed, regardless of how efficiently this may be done, but the work performed by the compute load — the data processing output of the IT equipment. To accurately assess the overall efficiency of an entire facility, it is therefore a fundamental requirement that energy usage should be measured at the server level rather than the rack level.



Is ITUE a Better Metric for Sustainability?

As described above, PUE is an incomplete metric in that it considers only the power delivered to the rack rather than the use to which that power is put. However, around 10 years ago, Michael Patterson from Intel Corp. and the Energy Efficiency HPC Working Group (EEHPC WG), et al., proposed two alternative metrics: IT power usage effectiveness (ITUE) and total power usage effectiveness (TUE).

The purpose of ITUE, as its name suggests, is to give an indication of PUE for the ITE rather than for the data center. It effectively provides a PUE for the equipment rack by dividing the total energy supplied to the IT equipment by the total energy that is delivered to the electronic components. Hence, ITUE accounts for the impact of rack-level ancillary components, such as server cooling fans, power supply units, and voltage regulators, which can consume a significant proportion of the energy supplied.

However, when attempting to assess overall energy efficiency of the data center, ITUE also has its shortcomings, as power demands external to the rack are not considered. This can be addressed by multiplying ITUE (a server-specific value) and PUE (a data center-specific value) to obtain TUE. Although TUE has been around for more than 10 years, it has gone mostly unnoticed, adopted by just a small percentage of the industry. Yet, the metric holds the possibility of a more precise indication of overall data center energy performance.
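The relationship between the three metrics can be sketched in a few lines of Python. This is only an illustration: the function names and the sample power figures are assumptions made for the example, not measurements from any facility, and instantaneous power is used in place of energy for simplicity.

```python
def pue(total_facility_kw: float, ite_kw: float) -> float:
    """Total facility power divided by power delivered to the IT equipment."""
    return total_facility_kw / ite_kw


def itue(ite_kw: float, electronic_components_kw: float) -> float:
    """Power delivered to the IT equipment divided by the power that
    actually reaches the electronic components (after fans, PSUs,
    and voltage regulators have taken their share)."""
    return ite_kw / electronic_components_kw


def tue(pue_value: float, itue_value: float) -> float:
    """TUE is the product of ITUE and PUE."""
    return itue_value * pue_value


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
facility_kw = 1200.0    # everything the site draws
ite_kw = 1000.0         # power delivered to the racks
components_kw = 850.0   # power reaching the electronic components

site_pue = pue(facility_kw, ite_kw)          # 1.2
rack_itue = itue(ite_kw, components_kw)      # about 1.18
site_tue = tue(site_pue, rack_itue)          # about 1.41

print(f"PUE={site_pue:.2f}  ITUE={rack_itue:.2f}  TUE={site_tue:.2f}")
```

Because the power delivered to the IT equipment cancels out, TUE reduces to total facility power divided by the power reaching the electronic components. In this made-up case, a site reporting a PUE of 1.2 has a TUE of roughly 1.41.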

Though the debate continues about creating new metrics, raising awareness of TUE and promoting it as an industry benchmark standard for data center energy efficiency could be valuable in helping data center operators implement improvements in performance.

TUE in Action

Since the biggest single use of energy in any data center is the ITE, implementing solutions that yield improvements at the rack level must be among the first steps taken toward achieving net-zero carbon targets.

Take the way cooling is delivered to the processors. Since its inception, the data center industry has focused on engineering secure and controlled environmental conditions within the technical space. Practically every data center manager is familiar with the principles of air cooling, which has been the predominant strategy for many years. Over the years, the traditional chiller and CRAH approach has been augmented by new methods of delivery, including direct economization and indirect air cooling with evaporation. Different containment systems have been devised, and the ASHRAE TC 9.9 Thermal Guidelines have been broadened. All these measures have driven incremental improvements in data center energy efficiency.

The challenge ahead, however, is twofold. Firstly, it's difficult to see how the energy efficiency of air cooling can be further improved by any significant degree. Deltas can be widened, and supply temperatures and humidity bands marginally increased, but the fundamental physics of air as a cooling medium are what they are. Secondly, as already identified by the high-performance computing (HPC) community, amongst others, the relentless rise in rack power means that air cooling has run out of road, so to speak, as an effective strategy for removing heat from the technical space. The amount of air needed to dissipate heat from increasingly common high-density loads is creating practical challenges. It's difficult to see how air-cooling strategies could be effectively deployed to meet increasing rack power and, at the same time, provide significant improvements in energy efficiency.

Increases in rack power density are not just the consequence of emerging and esoteric applications but are the result of a growing trend toward the inclusion of AI or ML capabilities within a wide range of software applications and the rapid expansion of graphical streaming services, all of which are driving a requirement for higher-power CPUs and GPUs.

Air cooling is simply no longer able to meet the challenge and is becoming outdated by the changing demands of the hardware it serves. Higher power densities are compelling IT leaders to innovate. As we’ve witnessed in the data center press recently, hyperscalers and internet giants, such as Microsoft, are already advanced in their experimentation with liquid immersion cooling and are reaping the benefits of increased energy efficiency.

The level of granularity provided by TUE gives a better understanding of what is taking place inside both the facility and the rack. This highlights an important attribute of immersion cooling architecture — namely the comparatively small proportion of server power that is required for parasitic loads, such as server fans, once it is deployed.

For example, in a conventional 7.0-kW air-cooled rack, as much as 10% of the power delivered to the ITE is consumed by the server and PSU cooling fans. Additionally, some data center operators positively pressurize the cold aisle to assist the server fans and achieve more effective airflow through the rack. However, there are limitations as to how far this strategy can be exploited before it ceases to be beneficial. By comparison, the pumps required to circulate dielectric fluid within a precision immersion liquid-cooled chassis draw substantially less power.
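The fan figures above translate into a simple piece of arithmetic. The sketch below takes the 10% share as an upper bound ("as much as") and, as a stated simplification, ignores PSU conversion and voltage regulator losses, so the resulting ITUE is a floor rather than a full value. The helper name `fan_overhead` is hypothetical.

```python
def fan_overhead(rack_kw: float, fan_fraction: float) -> tuple[float, float]:
    """Return (fan power in kW, lower-bound ITUE) for an air-cooled rack.

    Only fan power is counted as rack-level overhead here; PSU and
    voltage regulator losses are deliberately left out, so the true
    ITUE would be higher than the value returned.
    """
    fan_kw = rack_kw * fan_fraction
    remaining_kw = rack_kw - fan_kw
    return fan_kw, rack_kw / remaining_kw


# Figures from the text: a 7.0-kW rack with up to 10% going to fans.
fan_kw, itue_floor = fan_overhead(rack_kw=7.0, fan_fraction=0.10)

print(f"Fan power: {fan_kw:.1f} kW")        # 0.7 kW
print(f"ITUE floor: {itue_floor:.3f}")      # about 1.111

# Combined with the illustrative PUE of 1.1 mentioned earlier in the article.
print(f"TUE floor: {1.1 * itue_floor:.3f}")  # about 1.222
```

On these assumptions, up to 0.7 kW of the rack's 7.0 kW goes to moving air, and a facility with a PUE of 1.1 would have a TUE of at least about 1.22.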

Liquid as a Cooling Medium

The heat-removal properties of dielectric fluids are at least an order of magnitude greater than those of air, and the amount of power needed to circulate enough fluid to dissipate heat from the electronic components of a liquid-cooled server is far less than that needed to maintain adequate airflow across an air-cooled server of equivalent power. Further, the comparatively higher operating temperatures of many facility water systems (>113°F for ASHRAE Cooling Class W5) that serve liquid-cooled installations are such that reliance upon energy-intensive chiller plants may be reduced or avoided altogether, further improving facility TUE.

By adopting liquid cooling, data center operators and owners can potentially drive up efficiencies at all levels in the system, achieving worthwhile improvements in energy efficiency. Increasing rack power density, free from the constraints of increased cooled-air movement, would also allow for more compact data centers. On their own, these measures will not achieve net-zero carbon but could be a significant step along the way.

Breaking the Status Quo

Deloitte’s digital transformation report cites the failure to change as “often characterized by a lack of urgency, a reluctance to adopt, a fear of change, and a lack of clarity about ‘where we’re headed and why’.” It’s human nature to stick with what you know, never more so than in a mission-critical sector like the data center industry, which, by its nature, is risk-averse and conservative.

Driving Change

Driven by the climate emergency, there is an urgent need to adopt both better energy efficiency metrics and cooling solutions. But data center professionals also need to challenge hardware design practices. Currently, the industry is geared around air-cooled servers. However, once hardware manufacturers start optimizing equipment for liquid-cooled environments, there is the potential to realize additional rack space and power utilization as well as sustainability gains across the industry. Adapting existing air-cooled facilities to support liquid-cooled racks could prolong their useful working lives, potentially increase their capacities, and avoid the cost and carbon impact of constructing new buildings.

While some transitions, such as new metrics and optimized server designs, will take time, there is no doubt about the efficacy of liquid cooling, according to Cundall’s research. Ecosystems, such as that between Iceotope, Schneider Electric, and Avnet, enable servers from manufacturers, including HPE and Lenovo, to be easily deployed in existing IT environments, both within data centers and in distributed edge environments. Initiating the transition to liquid cooling now will start the process of reducing the environmental impact of compute services sooner.

Malcolm Howe

Malcolm Howe BEng (Hons), CEng, MCIBSE, MIEI, MASHRAE, is a partner at Cundall.